Avinash Kaushik is a Big Deal in marketing circles. He’s not as big as our Top 15 Marketing Masters of the Digital World, but few are. Take my word for it–Avinash knows marketing and a lot of people follow him. He’s the Digital Marketing Evangelist for Google among other things, and he writes a blog called Occam’s Razor.

That’s ironic in a sense when you consider what Occam’s Razor is:

His principle states that among competing hypotheses, the one with the fewest assumptions should be selected.

In this case, Avinash is waxing eloquent about Artificial Intelligence. Like many others, he has seen the demos, come away impressed, drunk the Kool-Aid, and concluded that we are pretty much doomed. The AI Robotic Overlords are going to be running the world, taking all the jobs, and there is little we can do about it except to find the silver lining in those very dark clouds. In fact, it’s going to happen very soon, very quickly, and it will be much like the transition from Camels to Cars in Saudi Arabia.

In light of what Occam’s Razor means, I think it is ironic that he jumps to those conclusions, though as I mentioned, he is far from the first.

Avinash, it seems, saw a demonstration at Google (demos are SO powerful!) of manipulator arms learning how to select and grasp objects all by themselves. AI has crashed and burned in the past, and there are at least 2 identifiable bubbles so far, but Avinash offers a variety of arguments for why this time will be different.

Among his arguments are:

- We now have Continuous Learning.

- We have Complete Day One Knowledge.

- Computers only need to be 100,000 times faster to equal the power of our brains, and that goal is within sight due to Moore’s Law.

Here’s some video of Google’s Robotic Arms doing their learning:

OK, I admit, these robots are no Colossus of the Forbin Project candidates. And they couldn’t Open the Pod Bay Doors even if they wanted to.

Before I launch into my reaction to these all too common predictions that AI is right around the corner and will take all of our jobs, let me establish my own credentials. Hey, anyone can have an opinion, but like everyone else, I think my opinion is better!

I have worked in what many would call the field of Artificial Intelligence. I made the largest return I’ve ever made selling one of my 6 Venture Capital Startups to another company. The technology we built was able to automatically test software.

It would crawl the User Interface of a program, then it would use Machine Learning to automatically generate optimal test scripts for the software. The company was called Integrity QA, and eventually the product made its way through a series of acquisitions to IBM. It’s still available and is called IBM Rational Test Factory.

IBM Rational Test Factory…

Pretty cool, right?

In fact, the product uses an exotic Artificial Intelligence method known as Genetic Algorithms. To this day I make a very tidy living by creating software that’s capable of learning and solving important real-life problems in CNC Manufacturing.

Side story: when I was raising money for this startup, I walked into a meeting at a VC firm and learned they had invited Dr John R. Koza, one of the foremost experts on Genetic Algorithms.

I didn’t think anything of it (entrepreneurs are nothing if not sure of themselves!), but Koza told the assembled VCs he didn’t think my idea would work. He couldn’t cite any facts or logic; it was just his gut feeling. Needless to say, they didn’t invest, but they sure were impressed when we had it working 10 months later and had gotten an offer of $45 million to buy the company!

This stuff is very powerful and useful in real situations, but I know what goes on behind the scenes and I will be the first to tell you–it’s not artificially intelligent.

I think the whole AI thing is thoroughly over-hyped for marketing purposes, and it is making its third run on the Gartner Hype Cycle. In fact, it’s nearing the crest and ready to plummet into the Trough of Disillusionment soon.

Gartner pretty much agrees with that assessment and produced a Hype Cycle for AI this year:

Gartner lists the following as being at the Peak of Inflated Expectations:

- Deep Neural Network ASICs

- Level 3 Vehicle Autonomy

- Smart Robots

- Virtual Assistants

- Deep Learning

- Machine Learning

- NLP

- Autonomous Vehicles

- Intelligent Apps

- Cognitive Computing

- Computer Vision

- Level 4 Vehicle Autonomy

- Commercial UAVs (Drones)

That’s right–their view is that all of those things are way overhyped. They do hedge their bets a bit by saying:

The risk of ignoring potentially transformational AI exceeds the mitigated risk of fast, early failure.

With that background, and I hope you will read Avinash’s blog post extolling AI, here’s my reaction to his post and all the AI hype:

Can AIs as smart as Cockroaches really take your job?

Step right up and board the roller coaster of Artificial Intelligence!

You don’t want to miss this one folks. AI has been through the hype cycle twice before but this time it’s different. There will be no troughs of disillusionment or other scary drops because we now have even more amazing demos!

Yes, that’s right—demos!!!

Over here are robotic arms that can select objects from a pan, and they learned how to do this all by themselves. Wow!

Never mind that Terry Winograd had robots picking out objects in the last AI Bubble before the bottom fell out, this is new, New, NEW!

IBM’s chess master-beating Deep Blue…

The demos have always been great for AI. Even with the old AI from Bubbles Burst in Bygone Days, we had:

- Medical diagnosis better than what human doctors could do. See Mycin for prescribing antibiotics, for example. It was claimed to be better than human doctors at its job but never saw actual use.

- All manner of vision and manipulation. Blocks? So what. Driving cars? Yeah right. Turn ‘em loose against a New York cabbie and we’ll see how they do. The challenge for autonomous vehicles has always been the people, not the terrain.

No matter how many autonomous cars drive across the desert (talk about the easiest possible terrain), they’re nowhere until they can deal with stupid carbon units, i.e. People, without killing them or creating liability through property damage.

By the way, despite awarding numerous prizes of one million dollars and up, so far the DARPA Grand Challenge has failed to meet the goal Congress set for it when it awarded funding–to get 1/3 of all military vehicles to be autonomous by 2015. But the demos sure are sweet!

- Computers have been proving mathematical theorems for ages. In some cases they even generate better proofs than the humans. Cool. But if they’re so good, why haven’t they already pushed mathematics ahead by centuries? Something is not quite right with a demo that can only prove theorems already proven and little else.

- Oooh, yeah, computers are beating chess masters! Sure, but not in any way that remotely resembles how people play chess. They are simply able to consider more positions. That and the fact that their style of play is just odd and off-putting to humans is why they win. What good is it? One source claims Deep Blue cost IBM $100 million.

When are those algorithms going to genuinely add $1 billion to IBM’s bottom line? Building still more specialized computers to beat humans at Jeopardy or Go is just creating more demos that solve no useful problems and do so in ways that humans don’t. Show me the AI System that starts from nothing and can learn to beat any human at any game in less than a year and I will admit I have seen Deep Skynet.

There’ve been a lot of great AI demos over the years. So far every single one has been highly specialized, didn’t work remotely as human minds do, and didn’t wind up launching giant industries that ate up everyone’s jobs.

There’ve been a lot of very smart people, experts in the field of AI and not just gushing reporters and futurists, who’ve proclaimed it’s right around the corner. And they’ve been wrong countless times.

Most all of them have claimed it’s just a matter of computer power. And we keep getting more and more of that. But it’s far from clear that AI has really gotten that much smarter.

Avinash says we just need 100,000 times faster computers. Woo hoo!

And many are predicting exactly when the singularity will happen based on that. Avinash says computers get 10 times faster every 5 years so it’ll only take 25 years to get computers 100,000 times faster.

Whoa there big fella!

Moore’s Law actually says they get twice as fast every 2 years. So after 6 years, they are 8 times faster, not 10 times faster in 5 years.

Will Robby be here in 34 years?

It’s going to take more like 34 years to get there. And there are a LOT of assumptions behind that:

What if Moore’s Law doesn’t hold?

It hasn’t been easy keeping up, and nobody thinks it will go on forever. Yet we need it to continue another 34 years without slowing down just to have a shot at this AI thing.
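For anyone who wants to check the arithmetic, here is a minimal sketch. It assumes the simple reading of Moore’s Law used in this post (speed doubles every 2 years) and compares it with the 10x-every-5-years rate. The function name `years_to_reach` and the 3-year doubling scenario are my own illustrative assumptions, not anything from Avinash’s post or from Gartner.

```python
import math

def years_to_reach(target_speedup, factor, period_years):
    """Years needed for speed to grow by target_speedup,
    if speed multiplies by `factor` every `period_years`."""
    steps = math.log(target_speedup) / math.log(factor)
    return steps * period_years

TARGET = 100_000  # the "100,000 times faster" goal

# Doubling every 2 years (the reading of Moore's Law used in this post).
moore = years_to_reach(TARGET, 2, 2)

# Counting only whole doublings: 2**17 is the first power of 2 above 100,000.
whole_doublings = math.ceil(math.log2(TARGET))
moore_whole = whole_doublings * 2

# 10x every 5 years, the faster rate behind the 25-year estimate.
tenfold = years_to_reach(TARGET, 10, 5)

# Hypothetical slowdown: doubling stretches out to every 3 years.
slower = years_to_reach(TARGET, 2, 3)

print(f"Doubling every 2 years: {moore:.1f} years ({moore_whole} in whole doublings)")
print(f"10x every 5 years:      {tenfold:.1f} years")
print(f"Doubling every 3 years: {slower:.1f} years")
```

Seventeen whole doublings is where the 34 years comes from. The last line is the point of this section: stretch the doubling period even a little and the finish line moves out by well over a decade.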

It’s also become harder and harder to harness that power and put it to use. I’ve written before about the multicore crisis and how PCs quit getting much faster as far as the average user could see.

The days when you upgraded your machine every 2 years because it was so much faster are over. Here’s what happened to clock speeds that I call a picture of the multicore crisis:

Way back in 2006, clock speeds plateaued and quit improving. Now we get more cores instead of faster cores. What that means is that software has to become increasingly complex before we can take advantage of more cores. Largely, that hasn’t materialized on the desktop, because it’s hard.

BTW, just being able to build a powerful enough computer, one that takes a giant data center to house, doesn’t mean it will make sense to use that computer to take over minimum wage jobs.

It’s an expensive piece of machinery for many more years before it becomes economical. We can build one in 34 years and then how many more years until we can fit one cheaply on a desktop? 10 years? 20 years?

What if neurons in the brain are significantly more powerful than we think?

There is credible evidence, for example, to suggest that the brain may be capable of quantum computing on some level. The Atlantic has a particularly approachable article on this theory.

If the brain really is a quantum computer, that is going to change the math of getting to the power of a human mind quite a lot. We’re way behind at creating quantum computers on that scale, though the early work is promising. It could well take 20 to 30 years to be able to tame quantum computing for conventional applications, and we’ll still have to figure out how to employ and scale them for AI applications.

What about the software?

The first 2 AI hype cycles failed because we had chosen essentially the wrong architectures.

First it was logic and exhaustive search, then it was expert systems and knowledge representation. Now we think it is neural networks, and because they can learn, we’ll just let them learn and not worry too much about software.

But there’s a whole lot more that we know about humans and animals now that isn’t addressed by that model. How are instincts programmed in? What’s the role of emotion and hormones and how are they implemented?

Think of every hard question having to do with why human minds do what they do. Think of how intractable mental illness is simply because we are largely clueless.

Isn’t it hubris thinking we just need more powerful neural nets and we are there? You bet it is.

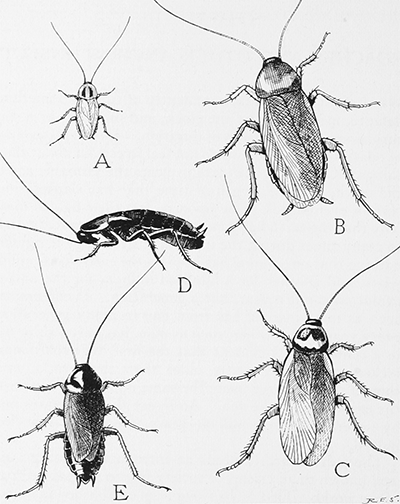

Could a cockroach do your job?

Artificial Cockroaches

So we need artificial brains that are 100,000 times more powerful. In essence, we can compare today’s AI to brains the size of what cockroaches have. Yet, we’re worried they’re going to take all of our jobs.

Are you in a job that a cockroach could do? I hope not.

So far, I am not aware of anyone having harnessed cockroaches to do their bidding, but they are cheap, plentiful, and just as smart as today’s AIs. Maybe smarter if their brains are quantum computers too.

Maybe it would be cheaper to spend billions learning how to make cockroaches useful?

I don’t know, but we don’t even seem to be able to make much smarter animals useful. Are there dogs running machinery somewhere in China? Is a particularly adept German Shepherd behind the latest quant trading engine on Wall Street?

Nope.

Be sure you jump off the bubble before it bursts!

Get ready for the trough of disillusionment for AI, people. It isn’t going to take your jobs away. In fact, quite the opposite will happen. Just as has happened in previous AI bubbles, AI jobs are going to go away.

That’s how the third AI Bubble will end. But take heart, AI aficionados. There will inevitably be a fourth AI Bubble. It will happen before the 34 years brings us powerful enough machines, but it will be close enough to that epoch to inflate expectations.

It will burst too, because we’ll blow right through the creation of 100,000x more powerful machines and be left wondering why they didn’t work.

Why won’t the machines talk to us?

My theory about that is the machines are going to be much like Helen Keller. She was an extremely bright and gifted person. Yet because she was blind and deaf, she couldn’t communicate for a long time.

People are not very patient. We will eventually create artificially intelligent machines, but at least a few generations of them will be killed before we realize they really did work simply because they didn’t communicate well enough to convince us in time.

The first AIs will not be gifted super intellects. It’s hubris to think they would be. In fact, they will likely be extremely limited–nothing like Helen Keller.

PS: What about Continuous Learning and Day One Knowledge?

These are supposed to be game changers. Continuous Learning means we can harness a bunch of robots to pool their knowledge as they tirelessly learn 24×7. Google had a row of robotic arms learning to manipulate blocks collectively, and they managed to do it in 3,000 robot hours of practice.

Day One Knowledge suggests that since this is all algorithms and data, it is somehow instantly transferable. The robots will make each other smarter and never forget anything.

Here’s the thing: people have been doing continuous learning with day one knowledge for a long time. That’s the purpose of language, reading, writing, the Scientific Method, and civilization in general.

It’s the machines that are late to the party while human progress has been accelerating. If not for our ability to collaborate and build on each other’s knowledge, we wouldn’t be talking about AI machines at all.

With all that said, I do love a good robot. In fact, I plan to build one soon. It won’t be artificially intelligent, but it will be very good at a fantastically fun and useful human task!

[ Avinash Responds: See the Follow-Up ]
